Paper Walkthrough | Graph-Guided Reasoning for Multi-Hop Question Answering in Large Language Models

Title: Graph-Guided Reasoning for Multi-Hop Question Answering in Large Language Models

Institution: Korea University, Amazon Alexa AI

Authors: Jinyoung Park, Ameen Patel, Omar Zia Khan, Hyunwoo J. Kim, Joo-Kyung Kim

Arxiv Link: https://arxiv.org/abs/2311.09762

Code Link: None

Date: 2023.11.16

Abstract: Chain-of-Thought (CoT) prompting has boosted the multi-step reasoning capabilities of Large Language Models (LLMs) by generating a series of rationales before the final answer. This paper analyzes the reasoning paths generated by CoT and identifies two issues in multi-step reasoning: (i) Generating rationales irrelevant to the question, (ii) Unable to compose subquestions or queries for generating/retrieving all the relevant information. To address these issues, the authors propose a graph-guided CoT prompting method, which guides the LLMs to reach the correct answer with graph representation/verification steps. This involves leveraging LLMs to construct a “question/rationale graph” using knowledge extraction prompting given the initial question and the rationales generated in previous steps. Then, the graph verification step diagnoses the current rationale triplet by comparing it with the existing question/rationale graph to filter out irrelevant rationales and generate follow-up questions to obtain relevant information. Additionally, CoT paths that exclude the extracted graph information are generated to represent the context information missed from the graph extraction. The proposed graph-guided reasoning method shows superior performance compared to previous CoT prompting and its variants on multi-hop question answering benchmark datasets.

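Introduction

The paper proposes a graph-guided reasoning method for multi-step (multi-hop) reasoning problems with large language models.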

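The paper makes two main contributions:

- The graph-guided reasoning method itself
- An in-context learning method for knowledge triplet extraction that allows variables to be defined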

Graph-Guided Reasoning for Multi-Step Problems with LLMs

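The method consists of four steps:

- Generate the question graph
- Decompose the question and generate intermediate sub-questions
- Answer the sub-questions
- Verify the intermediate reasoning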

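Question Graph

The question graph is built by extracting triplets from the question. "Graph-based" here essentially means converting the question into a symbolic reasoning process that forms part of the graph.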

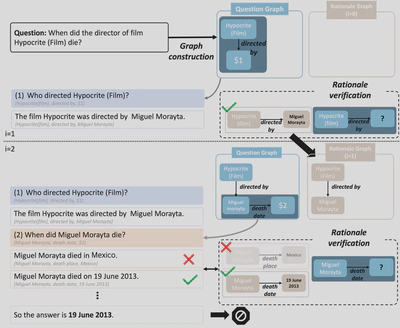

For example, the question “When did the director of film Hypocrite (Film) die?” can be converted into two sub-questions that serve as two edges of the graph: (‘Hypocrite (Film)’, directed by, $1) and ($1, death date, $2).

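The novelty here is that formal logic is brought into the reasoning process in a form resembling first-order logic (FOL). In this process the variables ($1, $2) may only stand for individuals, not for propositions or functions.

The authors introduce variable entities in order to handle wh-questions.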

“Wh-questions” are a type of question in English that begin with “wh-” words, such as “who,” “what,” “where,” “when,” “why,” and “how” (though “how” does not start with “wh,” it is grouped with them functionally).
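The question graph from the example above can be sketched as a small data structure. This is only an illustration: the `Triplet` type, the `is_variable` helper, and the convention that a variable is written as `$` followed by digits are assumptions made for this sketch, not the authors' implementation (in the paper the triplets are extracted by prompting an LLM).

```python
from typing import List, NamedTuple


class Triplet(NamedTuple):
    """One edge of the question graph: (subject, relation, object)."""
    subject: str
    relation: str
    obj: str


def is_variable(term: str) -> bool:
    # Assumption: variables are written "$" followed by digits, e.g. "$1".
    return term.startswith("$") and term[1:].isdigit()


def variables(graph: List[Triplet]) -> List[str]:
    """Collect the variables of a graph in order of first appearance."""
    seen: List[str] = []
    for t in graph:
        # Only subject and object can be variables; relations are fixed labels.
        for term in (t.subject, t.obj):
            if is_variable(term) and term not in seen:
                seen.append(term)
    return seen


# "When did the director of film Hypocrite (Film) die?"
question_graph = [
    Triplet("Hypocrite (Film)", "directed by", "$1"),
    Triplet("$1", "death date", "$2"),
]

print(variables(question_graph))
```

Here `$1` stands for the unknown director and `$2` for the unknown death date; the shared variable `$1` is what links the two edges into a two-hop path.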

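Intermediate Sub-Question Generation

Compared with other work, the distinguishing feature of sub-question generation in this paper is that it is guided by the graph.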

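Specifically, the method filters out question triplets whose subject and object are both variables or both entities. This step therefore only considers triplets made of an entity subject and a variable object, or a variable subject and an entity object.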

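This is intuitive: a triplet of the form (variable1, relationship, variable2) has no known entity to anchor a sub-question, and a triplet of the form (entity1, relationship, entity2) has nothing left to ask, so decomposing either one further is pointless.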
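The filtering rule described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: `Triplet`, `is_variable`, and `is_askable` are hypothetical names, variables are assumed to look like `$1`, and the entity-to-entity triplet is a made-up placeholder. Turning a kept triplet into a natural-language sub-question is left to the LLM and is not shown.

```python
from typing import NamedTuple


class Triplet(NamedTuple):
    subject: str
    relation: str
    obj: str


def is_variable(term: str) -> bool:
    # Assumption: variables are written "$" followed by digits, e.g. "$1".
    return term.startswith("$") and term[1:].isdigit()


def is_askable(t: Triplet) -> bool:
    # Keep a triplet only when exactly one of subject/object is a variable.
    return is_variable(t.subject) != is_variable(t.obj)


question_graph = [
    Triplet("Hypocrite (Film)", "directed by", "$1"),  # entity, variable: kept
    Triplet("$1", "death date", "$2"),                 # variable, variable: dropped
    Triplet("Entity A", "related to", "Entity B"),     # made-up entity, entity: dropped
]

askable = [t for t in question_graph if is_askable(t)]
print(askable)
```

The inequality `is_variable(subject) != is_variable(obj)` is true exactly when one side is a variable and the other is an entity, which covers both accepted cases in a single check.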

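Answering Sub-Questions

Sub-questions are shorter and simpler than the original question, so they are more likely to be answered correctly. Because LLMs are prone to hallucination, or may not have been trained on the relevant documents, their outputs can still be wrong; retrieval-augmented generation (RAG) can therefore be used to improve answer accuracy.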

Verifying Intermediate Reasoning

A generated rationale triplet is verified by checking whether the answer aligns with the question graph.

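Specifically, a rationale is accepted if at least two of the three components of its triplet are identical to those of some triplet in the question graph. If the rationale triplet matches no triplet in the question graph, it is rejected.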

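For example, take the rationale triplet (‘Hypocrite (Film)’, directed by, ‘Miguel Morayta’) and the question graph (‘Hypocrite (Film)’, directed by, $1), ($1, death date, $2). The question graph contains the triplet (‘Hypocrite (Film)’, directed by, $1), whose subject is ‘Hypocrite (Film)’ and whose relation is directed by, so the rationale is accepted.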

As a counterexample, no triplet in the question graph matches the rationale triplet (‘Miguel Morayta’, death place, ‘Mexico’), so that rationale is rejected.
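The acceptance rule and the two examples above can be sketched as follows. This is an illustration, not the authors' code: the function names `matches` and `verify` are hypothetical, and exact string equality is an assumption standing in for however the paper actually compares components.

```python
from typing import List, Tuple

Triplet = Tuple[str, str, str]  # (subject, relation, object)


def matches(rationale: Triplet, question: Triplet) -> bool:
    # Count positions (subject, relation, object) where the two triplets agree.
    # Assumption: components are compared by exact string equality.
    same = sum(a == b for a, b in zip(rationale, question))
    return same >= 2


def verify(rationale: Triplet, graph: List[Triplet]) -> bool:
    # Accept the rationale if it matches at least one question-graph triplet.
    return any(matches(rationale, q) for q in graph)


question_graph = [
    ("Hypocrite (Film)", "directed by", "$1"),
    ("$1", "death date", "$2"),
]

accepted = verify(("Hypocrite (Film)", "directed by", "Miguel Morayta"), question_graph)
rejected = verify(("Miguel Morayta", "death place", "Mexico"), question_graph)
print(accepted, rejected)
```

The first rationale shares its subject and relation with the first edge of the graph, so it passes; the second shares no component with either edge, so it is filtered out as irrelevant to the question.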

Experiments

Setup

Datasets:

- 2WikiMultihopQA consists of 2-hop complex questions requiring composition or comparison.
- MuSiQue is a more challenging dataset whose problems include 2- to 4-hop questions that can be decomposed into simpler questions.
- Bamboogle is a dataset consisting of 125 two-hop questions where the supporting evidence is from Wikipedia.

Models:

- Llama-2 13B
- Llama-2 70B

Results

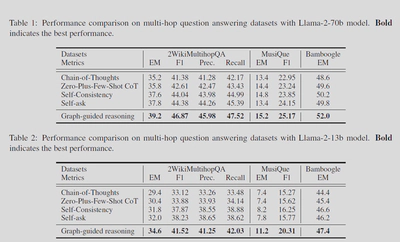

The figure above shows part of the experimental results. Compared with each of the baselines, the proposed method achieves the best performance.