Translating Words to Worlds: Zero-Shot Synthesis of 3D Terrain from Textual Descriptions Using Large Language Models

Abstract

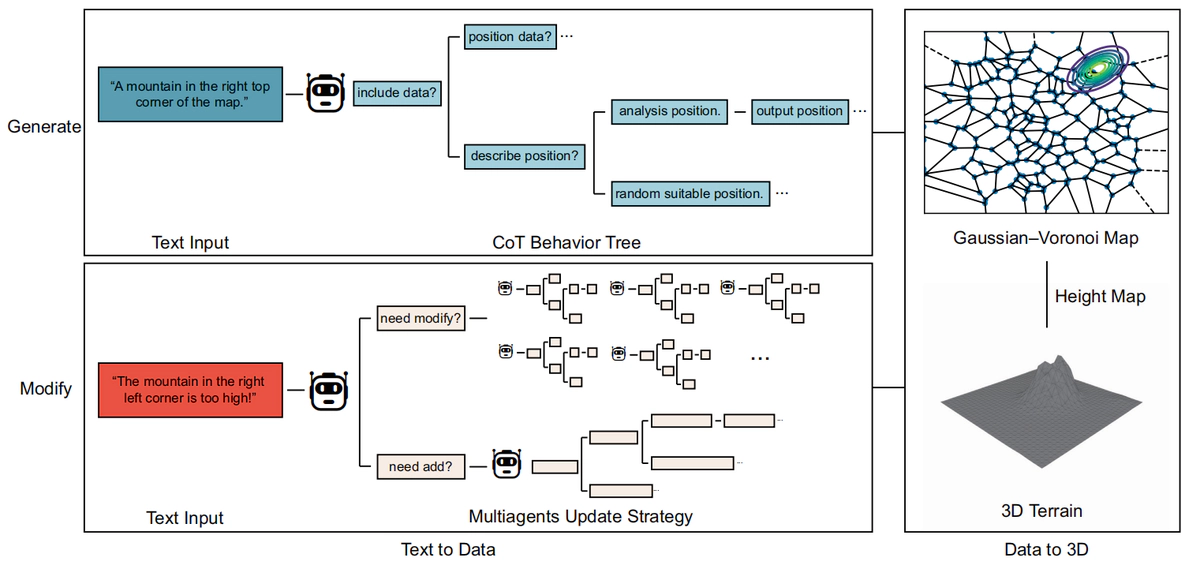

Current research on text-guided 3D synthesis relies predominantly on complex diffusion models, which pose significant challenges for tasks such as terrain generation. This study investigates direct, zero-shot text-to-3D terrain synthesis without diffusion models. Exploiting the spatial awareness inherent in large language models, we formulate a method for updating existing 3D models through text, thereby improving their accuracy. Specifically, we introduce a Gaussian–Voronoi map data structure that converts simple map summaries into detailed terrain heightmaps. A chain-of-thought behavior tree approach, which combines action chains and thought trees, guides the model to analyze varied textual inputs and extract the relevant terrain data, bridging the gap between textual descriptions and 3D models. Furthermore, we develop a text–terrain re-editing technique based on multiagent reasoning that allows the terrain’s representational structure to be updated dynamically. Experimental results indicate that the method interprets the spatial information embedded in text and generates controllable 3D terrains with superior visual quality.
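To make the idea of a Gaussian–Voronoi map more concrete, the following minimal sketch shows one way a short list of labeled seed points could be expanded into a heightmap. It is an illustrative assumption, not the data structure defined in this paper: the function name `gaussian_voronoi_heightmap`, the seed format `(x, y, peak_height, sigma)`, and the rule of applying only the owning seed's Gaussian falloff inside each Voronoi cell are all hypothetical choices.

```python
import math

def gaussian_voronoi_heightmap(seeds, size=64):
    """Expand seed points into a Voronoi ownership map and a heightmap.

    seeds: list of (x, y, peak_height, sigma) tuples in grid coordinates.
    This seed format is a hypothetical one chosen for illustration.
    """
    owner = [[0] * size for _ in range(size)]
    height = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            # Voronoi step: find the nearest seed by squared distance.
            d2, idx = min(
                ((x - sx) ** 2 + (y - sy) ** 2, i)
                for i, (sx, sy, _, _) in enumerate(seeds)
            )
            _, _, peak, sigma = seeds[idx]
            owner[y][x] = idx
            # Gaussian step: height decays with distance from the owning seed.
            height[y][x] = peak * math.exp(-d2 / (2 * sigma ** 2))
    return owner, height

# Example summary: a tall, broad mountain and a low, narrow hill.
seeds = [(16, 16, 100.0, 8.0), (48, 48, 20.0, 5.0)]
owner, height = gaussian_voronoi_heightmap(seeds)
```

In this sketch the coarse "map summary" is the seed list, and the detailed output is the per-cell height grid. A fuller version might blend neighboring cells to avoid discontinuities at Voronoi boundaries.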