- First Comprehensive Analysis: Unraveling the impact of graph description order on LLM performance.

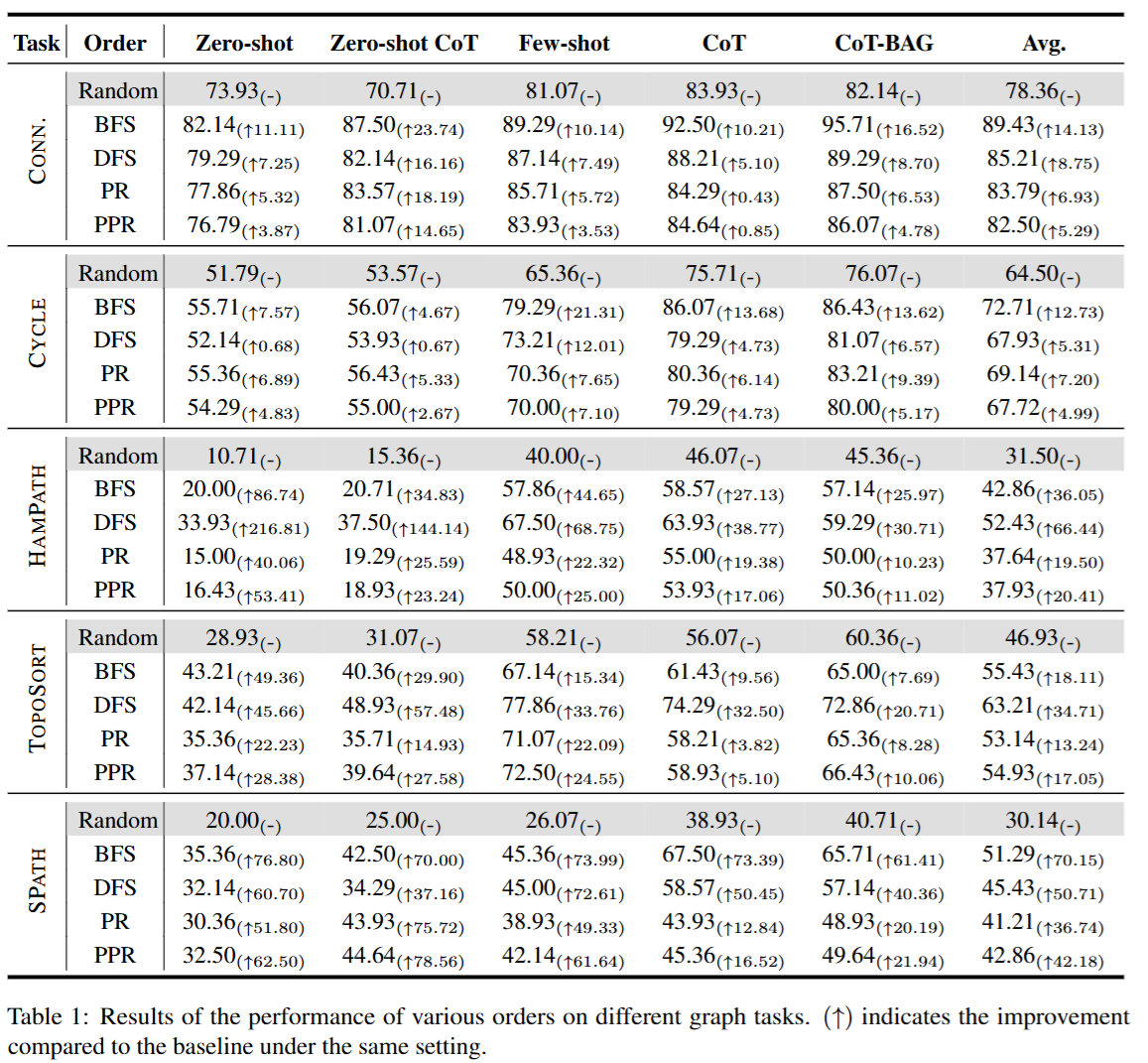

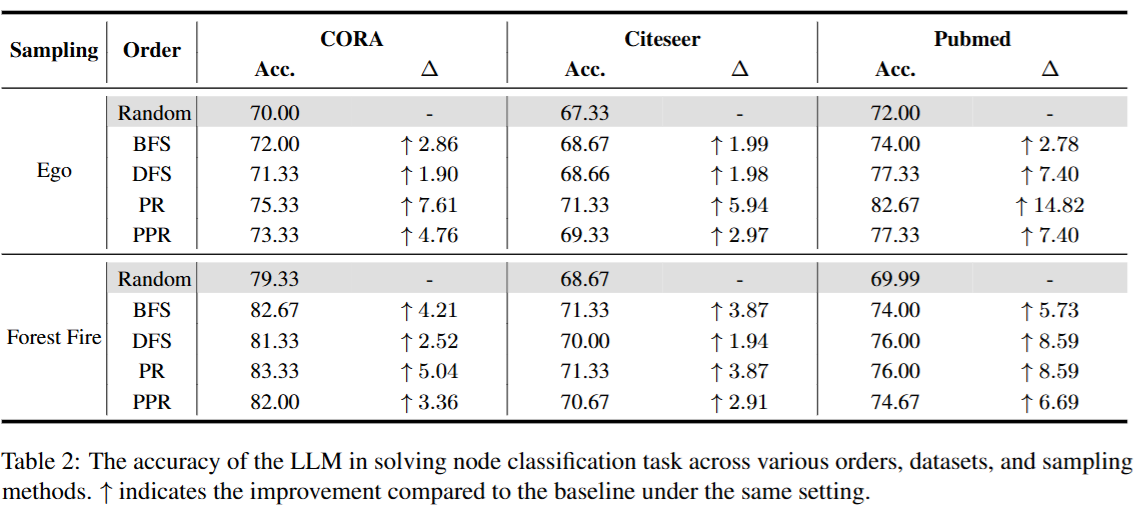

- Significant Performance Gains: Ordered descriptions based on breadth-first search (BFS), depth-first search (DFS), PageRank (PR), and personalized PageRank (PPR) yield marked improvements across diverse graph tasks.

- GraphDO Dataset: A novel benchmark dataset that propels research in graph reasoning with LLMs.
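This page does not spell out how an ordered description is built. As a rough illustration only, the sketch below assumes an undirected graph stored as an adjacency dict. It lists each edge once, in the order a BFS or DFS traversal from a chosen start node first reaches it, and it uses a shuffled edge list as a stand-in for an unordered baseline. The function names, the start node, and the sentence template in `describe` are hypothetical and are not taken from the GraphDO code.

```python
import random
from collections import deque


def bfs_edge_order(adj, start):
    """Edges of an undirected graph, each listed once, in the order BFS from `start` reaches them."""
    seen_nodes, seen_edges, order = {start}, set(), []
    queue = deque([start])
    while queue:
        u = queue.popleft()
        for v in sorted(adj[u]):  # sorted neighbours keep the output deterministic
            edge = frozenset((u, v))
            if edge not in seen_edges:
                seen_edges.add(edge)
                order.append((u, v))
            if v not in seen_nodes:
                seen_nodes.add(v)
                queue.append(v)
    return order


def dfs_edge_order(adj, start):
    """Same idea, but following a recursive depth-first traversal from `start`."""
    seen_nodes, seen_edges, order = set(), set(), []

    def visit(u):
        seen_nodes.add(u)
        for v in sorted(adj[u]):
            edge = frozenset((u, v))
            if edge not in seen_edges:
                seen_edges.add(edge)
                order.append((u, v))
            if v not in seen_nodes:
                visit(v)

    visit(start)
    return order


def shuffled_edge_order(adj, seed=0):
    """Hypothetical unordered baseline: every undirected edge once, in random order."""
    edges = sorted({tuple(sorted((u, v))) for u in adj for v in adj[u]})
    random.Random(seed).shuffle(edges)
    return edges


def describe(edges):
    """Hypothetical sentence template that turns an edge list into prompt text."""
    return " ".join(f"Node {u} is connected to node {v}." for u, v in edges)


adj = {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2, 4], 4: [3]}
print(describe(bfs_edge_order(adj, 0)))
print(describe(dfs_edge_order(adj, 0)))
print(describe(shuffled_edge_order(adj)))
```

On this five-node example, BFS lists (0, 1), (0, 2), (1, 3), (2, 3), (3, 4), while DFS lists (0, 1), (1, 3), (3, 2), (2, 0), (3, 4). Both contain the same edges, so any difference in model accuracy comes from the order alone. Both traversals only cover the connected component that contains `start`.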

Can Graph Descriptive Order Affect Solving Graph Problems with LLMs?

Abstract

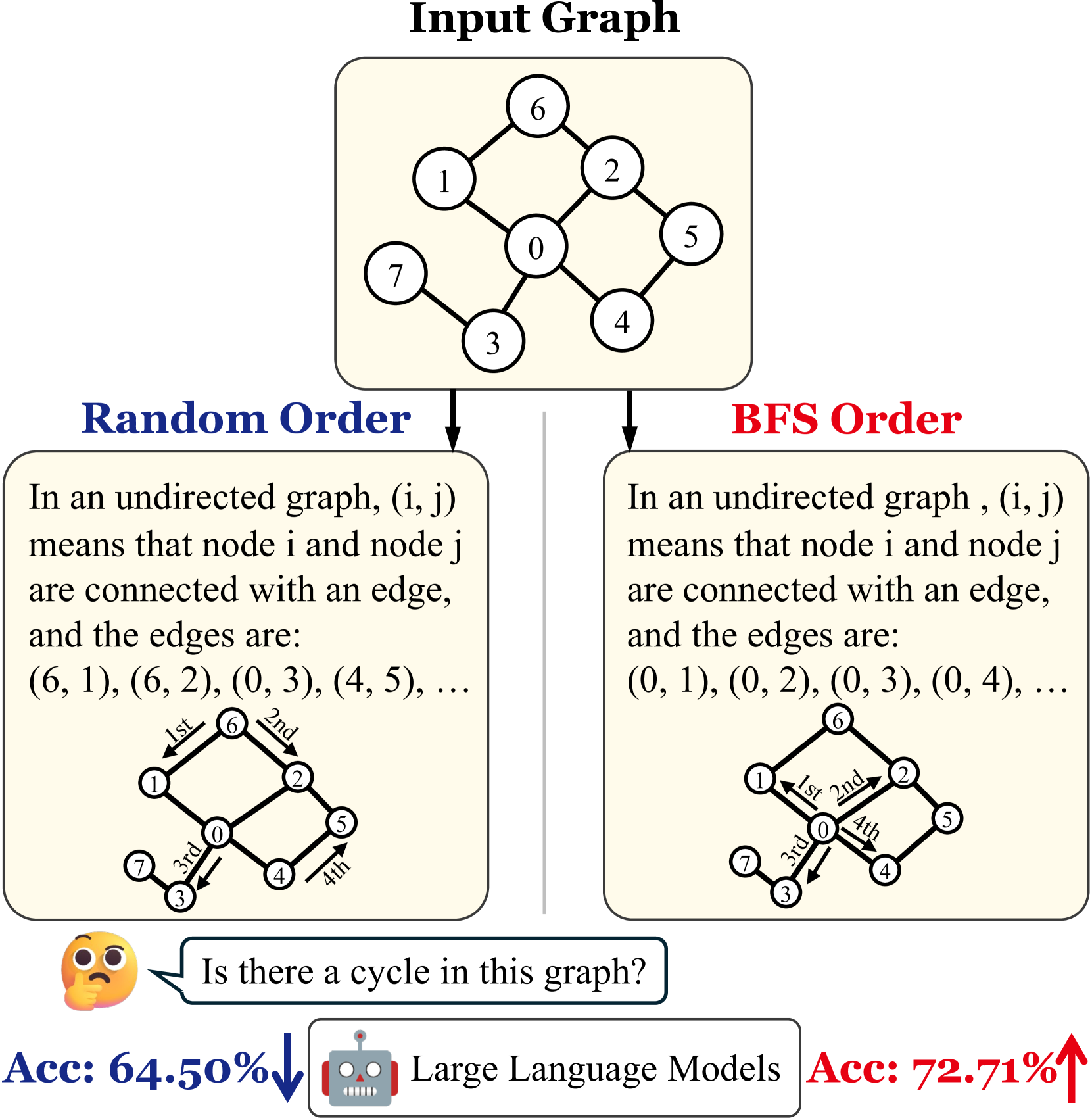

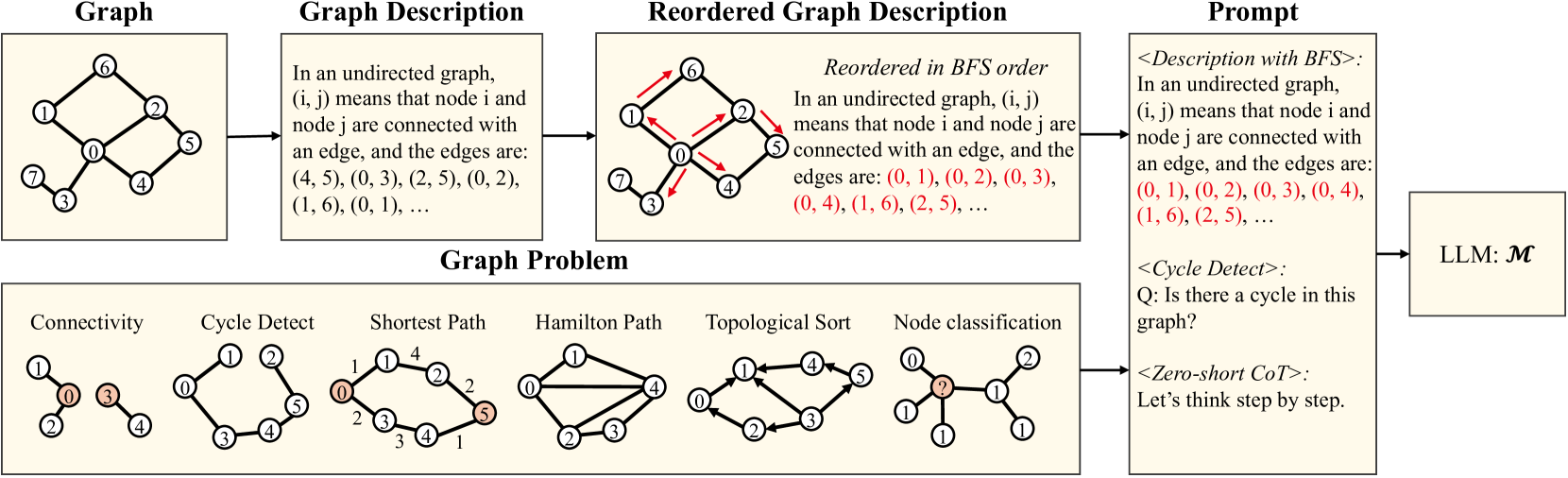

Large language models (LLMs) have achieved significant success in reasoning tasks, including mathematical reasoning and logical deduction. Among these reasoning tasks, graph problems stand out due to their complexity and unique structural characteristics, attracting considerable attention from researchers. Previous studies have explored LLMs’ graph reasoning abilities through various techniques, such as different encoding methods for graph structures and carefully designed prompts. However, a critical factor has been largely overlooked: the order in which graph descriptions are presented to the model in the prompt. In this study, we present the first comprehensive analysis of how the order of graph descriptions affects LLM performance. Specifically, we evaluate four graph description orders across six graph problems using six mainstream LLMs. The results reveal that: (1) ordered graph descriptions significantly improve LLMs’ comprehension of graph structures; (2) the robustness of LLMs to graph description order varies across tasks; and (3) the impact of graph description order on performance is closely related to the inherent characteristics of each task. Our study advances the application of LLMs to graph-related problems and paves the way for future research on optimizing model performance through strategic graph description ordering.
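Unlike BFS and DFS, the PR (PageRank) and PPR (personalized PageRank) orders rank nodes instead of traversing them. The page does not confirm how a ranking becomes an edge order. This sketch assumes one plausible rule: visit nodes from highest to lowest score and, for each node, list its not-yet-described edges with higher-scored neighbors first. Scores come from PageRank computed by power iteration with damping factor 0.85, a common default. Passing a `personalization` weight map that puts all teleport mass on a single seed node gives personalized PageRank. The function names and the ranking-to-edges rule are hypothetical.

```python
def pagerank(adj, alpha=0.85, personalization=None, iters=200, tol=1e-12):
    """PageRank by power iteration; a `personalization` map (node -> weight) gives PPR."""
    nodes = sorted(adj)
    if personalization is None:
        teleport = {u: 1.0 / len(nodes) for u in nodes}
    else:
        total = sum(personalization.values())
        teleport = {u: personalization.get(u, 0.0) / total for u in nodes}
    rank = dict(teleport)
    for _ in range(iters):
        new = {u: (1 - alpha) * teleport[u] for u in nodes}
        # Nodes without neighbours ("dangling") redistribute their mass via the teleport vector.
        dangling = sum(rank[u] for u in nodes if not adj[u])
        for u in nodes:
            if adj[u]:
                share = alpha * rank[u] / len(adj[u])
                for v in adj[u]:
                    new[v] += share
        for u in nodes:
            new[u] += alpha * dangling * teleport[u]
        converged = sum(abs(new[u] - rank[u]) for u in nodes) < tol
        rank = new
        if converged:
            break
    return rank


def rank_edge_order(adj, scores):
    """Hypothetical rule: highest-scored nodes first, each undirected edge listed once."""

    def by_score(x):
        return (-scores[x], x)  # higher score first; ties broken by node id

    seen, order = set(), []
    for u in sorted(adj, key=by_score):
        for v in sorted(adj[u], key=by_score):
            edge = frozenset((u, v))
            if edge not in seen:
                seen.add(edge)
                order.append((u, v))
    return order


adj = {0: [1, 2, 3], 1: [0], 2: [0, 3], 3: [0, 2]}
pr = pagerank(adj)
ppr = pagerank(adj, personalization={1: 1.0})  # all teleport mass on seed node 1
print(rank_edge_order(adj, pr))   # [(0, 2), (0, 3), (0, 1), (2, 3)]
print(rank_edge_order(adj, ppr))  # [(0, 1), (0, 2), (0, 3), (2, 3)]
```

Because the seed node receives all of the teleport mass, PPR moves the seed's edges toward the front of the description. With seed 1 in this example, edge (0, 1) moves from third place under PR to first place under PPR.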

BibTeX

@misc{ge2024graphdescriptiveorderaffect,
  title={Can Graph Descriptive Order Affect Solving Graph Problems with LLMs?},
  author={Yuyao Ge and Shenghua Liu and Baolong Bi and Yiwei Wang and Lingrui Mei and Wenjie Feng and Lizhe Chen and Xueqi Cheng},
  year={2024},
  eprint={2402.07140},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2402.07140},
}